
The Numbers Game: A Practical Guide to Calculating and Validating Library Diversity with NGS

The success of any surface display campaign depends on the quality of your starting library. While Sanger sequencing provides a glimpse, it fails to reveal critical biases that can doom a screen before it begins. This technical guide details how to use Next-Generation Sequencing (NGS) to get a true, quantitative measure of your library's health. We cover the essential metrics—from valid read percentages to the overlooked impact of library uniformity—and provide practical workflows for analyzing both short and long diversified regions. Read on to learn how to de-risk your project and ensure you're screening a library built for success.

9/8/2025 · 5 min read


In any surface display or directed evolution campaign, the quality of your starting library is the single most important predictor of success. A diverse, accurately synthesized library contains the raw material for discovery; a biased or poorly constructed one guarantees failure. While library quality has traditionally been assessed by Sanger sequencing a few dozen clones, this method offers a dangerously incomplete picture.

Next-Generation Sequencing (NGS) provides a deep, quantitative, and actionable assessment of library quality before you invest weeks of time and resources into a screening campaign. This guide provides a framework for the key metrics and practical steps to validate your library's diversity using NGS.

Why Sanger Sequencing is No Longer Enough

Sanger sequencing of 20, 40, or even 96 clones provides a qualitative snapshot at best. For a library with a theoretical diversity of millions, a sample this small carries almost no statistical weight: 96 clones represent less than 0.01% of a one-million-variant library. You might confirm that some diversity exists, but you have no quantitative insight into the two most critical parameters:

True Diversity: How many of your designed variants are actually present?

Distribution: Are the variants present at relatively uniform frequencies, or is your library dominated by a small number of "jackpot" sequences?

Relying on Sanger alone is like trying to survey a forest by looking at a single tree. You miss the big picture, and critical biases can go completely undetected until it's too late.
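To put a number on this, here is a minimal Python sketch (our own illustration, not a standard QC tool) that estimates how many identical clone pairs you would expect in a Sanger panel. It uses the birthday-problem identity: the expected number of duplicate pairs among n random clones is C(n, 2) × Σpᵢ², where pᵢ is each variant's frequency. The two library models are assumptions that mirror the 1,000,000-variant uniform and "Critical Failure" scenarios discussed later in this guide, with variants inside each group treated as equally abundant.

```python
from math import comb


def expected_duplicate_pairs(n_clones, groups):
    """Expected number of identical clone pairs among n_clones random picks.

    groups: list of (n_variants, share_of_molecules) tuples; variants within a
    group are assumed equally abundant, and the shares should sum to 1.
    """
    # Probability that two random molecules carry the same variant: sum of p_i^2.
    collision = sum(share ** 2 / n_variants for n_variants, share in groups)
    # Linearity of expectation over all possible clone pairs.
    return comb(n_clones, 2) * collision


uniform = [(1_000_000, 1.0)]
critical_failure = [(100_000, 0.9), (900_000, 0.1)]

for name, lib in [("uniform", uniform), ("critical failure", critical_failure)]:
    print(f"{name}: {expected_duplicate_pairs(96, lib):.4f} expected duplicate pairs")
```

Both values come out far below 1, so a 96-clone panel would most likely show 96 distinct sequences whether the library is perfectly uniform or functionally useless.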

The Core Metrics of an NGS Library QC Analysis

A robust NGS analysis goes beyond simple read counting. It involves calculating specific metrics that together paint a comprehensive picture of library quality.

1. Valid Read Percentage (In-Frame and No-Stop)

This is the most fundamental measure of synthesis quality. After sequencing, your bioinformatics pipeline should translate each DNA read into its corresponding amino acid sequence and calculate the percentage of reads that are:

In the correct reading frame.

Free of premature stop codons.

A high-quality library should have a valid read percentage of >70-80%. A low value suggests systematic issues in oligo synthesis or library construction that will severely hamper any downstream selection efforts.
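The calculation itself is simple. Below is a minimal Python sketch, assuming each read has already been trimmed to the variable region; "in frame" is approximated as a length divisible by 3, and reads with ambiguous bases (translated as X) are counted as invalid. Both are simplifying assumptions rather than a fixed rule.

```python
# Standard genetic code, built from the conventional TCAG-ordered 64-letter string.
BASES = "TCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    b1 + b2 + b3: AMINO_ACIDS[16 * i + 4 * j + k]
    for i, b1 in enumerate(BASES)
    for j, b2 in enumerate(BASES)
    for k, b3 in enumerate(BASES)
}


def translate(dna):
    """Translate DNA codon by codon; unknown codons (e.g. containing N) become 'X'."""
    return "".join(CODON_TABLE.get(dna[i:i + 3], "X") for i in range(0, len(dna) - 2, 3))


def is_valid(region):
    """Valid = in frame (length divisible by 3), no stop codon, no ambiguous bases."""
    if len(region) % 3 != 0:
        return False
    protein = translate(region)
    return "*" not in protein and "X" not in protein


def valid_read_percentage(regions):
    regions = list(regions)
    if not regions:
        return 0.0
    return 100.0 * sum(is_valid(r) for r in regions) / len(regions)


# Toy reads: valid (MA), stop codon (M*), frameshift (5 nt), valid (WKP)
reads = ["ATGGCT", "ATGTAA", "ATGGC", "TGGAAACCC"]
print(valid_read_percentage(reads))  # 50.0
```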

2. Observed vs. Theoretical Diversity

This metric directly assesses the complexity of your library.

Theoretical Diversity: The total number of unique variants you intended to create (e.g., for an NNK library at 5 positions, this is 32⁵ ≈ 33.5 million DNA sequences, or 20⁵ = 3.2 million amino acid sequences).

Observed Diversity: The number of unique valid sequences detected by NGS.

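The ratio of Observed / Theoretical Diversity is a key performance indicator. While achieving 100% is unlikely for very large libraries, a high-quality library should cover a significant fraction of its designed sequence space. Two caveats apply when reading this ratio: observed diversity can never exceed the number of valid reads you sequenced, so the ratio is only meaningful when read depth comfortably exceeds theoretical diversity; and sequencing errors create spurious unique sequences that can inflate it.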
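As a worked example, this Python sketch reproduces the NNK numbers above and computes the ratio at the amino-acid level. The observed counts in the usage lines are hypothetical placeholders, not real data; the "depth ceiling" is simply the highest ratio your read count could possibly show.

```python
def nnk_theoretical_diversity(n_positions):
    """Return (DNA, amino-acid) theoretical diversity for an NNK library.

    NNK gives 4 * 4 * 2 = 32 codons per position, encoding all 20 amino acids
    plus one stop codon (TAG); the amino-acid count excludes stop-containing variants.
    """
    return 32 ** n_positions, 20 ** n_positions


def coverage_ratio(observed_unique, theoretical, valid_reads):
    """Observed / theoretical diversity, plus the ceiling imposed by read depth."""
    ceiling = min(1.0, valid_reads / theoretical)  # cannot observe more variants than reads
    return observed_unique / theoretical, ceiling


dna_div, aa_div = nnk_theoretical_diversity(5)
print(dna_div, aa_div)  # 33554432 3200000

# Hypothetical run: 2.1 million unique valid protein sequences from 20 million valid reads.
ratio, ceiling = coverage_ratio(2_100_000, aa_div, 20_000_000)
print(f"observed/theoretical = {ratio:.1%} (depth ceiling {ceiling:.0%})")
```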

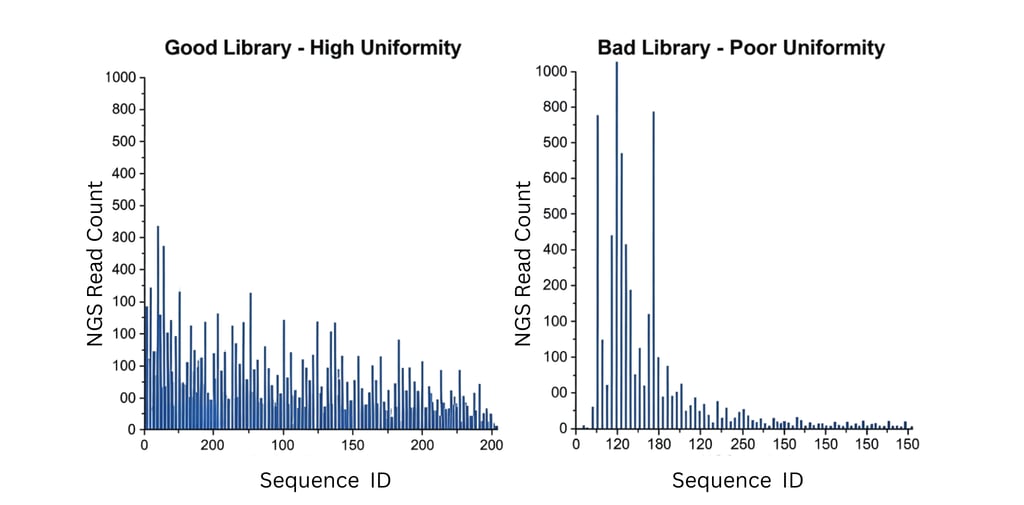

3. Library Uniformity: The Impact of Distribution Bias

This is arguably the most powerful, and most overlooked, metric. It measures the evenness of the distribution of variants. A library is not truly diverse if 90% of the population consists of only 10% of the unique variants. This distribution bias, often caused by issues in oligo synthesis or PCR amplification, can fatally undermine a screen.

Let's use a concrete example. Imagine a library with a theoretical diversity of 1,000,000 unique variants.

A Good, Uniform Library: Ideally, all 1,000,000 variants are present at a similar frequency. If you sequence 10 million total molecules, each unique variant would be represented by approximately 10 reads. This gives every variant a fair chance to be selected based on its fitness.

A Poor, Skewed Library: Now, consider the impact if your library is biased:

Sub-optimal: If 90% of your total molecules represent only 50% of your unique variants (500,000), your screen is heavily biased. You're effectively screening half a library, and better hits in the underrepresented half may be missed.

Poor: If 90% of your molecules represent only 30% of your unique variants (300,000), the bias becomes severe. You are likely just re-screening the same common variants over and over, wasting valuable sorting time.

Critical Failure: If 90% of your molecules represent a mere 10% of your unique variants (100,000), the library is functionally useless. The vast majority of your designed diversity is effectively missing, and any "hits" you find are more likely to be artifacts of this extreme initial bias than true binders.
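The arithmetic behind these scenarios can be made explicit. This Python sketch reuses the example above (10 million reads, 1,000,000 variants) and assumes, for simplicity, that variants within the over- and under-represented groups are equally abundant and that read counts follow Poisson sampling. A uniform library is framed as 90% of molecules spread over 90% of variants.

```python
import math

TOTAL_READS = 10_000_000
DIVERSITY = 1_000_000


def scenario_stats(top_share):
    """90% of reads fall on `top_share` of the variants; 10% on the rest.

    Returns (mean reads per over-represented variant,
             mean reads per under-represented variant,
             Poisson probability that an under-represented variant gets zero reads).
    """
    n_top = round(DIVERSITY * top_share)
    n_rest = DIVERSITY - n_top
    reads_top = 0.9 * TOTAL_READS / n_top
    reads_rest = 0.1 * TOTAL_READS / n_rest
    return reads_top, reads_rest, math.exp(-reads_rest)


for name, share in [("uniform", 0.9), ("sub-optimal", 0.5), ("poor", 0.3), ("critical failure", 0.1)]:
    top, rest, unseen = scenario_stats(share)
    print(f"{name:17s} {top:6.1f} vs {rest:5.2f} reads/variant, {unseen:.1%} of minority unseen")
```

In the critical-failure case each over-represented variant averages 90 reads while each suppressed variant averages about 1.1, and roughly a third of the suppressed variants would not appear at all in 10 million reads.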

How This Bias Destroys a Screen:

Wasted Effort: In the "Critical Failure" scenario, 90% of your screening effort is spent re-evaluating the same over-represented 100,000 clones.

Loss of High-Potential Hits: The remaining 900,000 unique variants are severely underrepresented or completely absent. The best potential binder may be in this suppressed group, but it will be outcompeted simply because it starts with a massive numerical disadvantage.

False Convergence: Your screen will quickly appear to "converge" on the over-represented clones, not because they are the best binders, but because they dominated the starting population.
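To check which of these situations you are in, you can compute the "X% of variants hold 90% of reads" figure directly from your variant counts. The Python sketch below is one straightforward way to express that metric; the function name is our own, and whether to divide by observed or theoretical diversity is a judgment call left to the `n_variants` parameter.

```python
from collections import Counter


def variant_share_for_read_share(counts, read_share=0.9, n_variants=None):
    """Smallest fraction of variants that together account for `read_share` of reads.

    counts: mapping variant -> read count (e.g. a Counter of valid protein sequences).
    n_variants: denominator; defaults to the number of observed variants, but you may
    pass the theoretical diversity instead if many designed variants were never seen.
    """
    sorted_counts = sorted(counts.values(), reverse=True)  # most abundant first
    total = sum(sorted_counts)
    if total == 0:
        raise ValueError("no reads to analyze")
    denominator = n_variants or len(sorted_counts)
    running = 0
    for i, c in enumerate(sorted_counts, start=1):
        running += c
        if running >= read_share * total:
            return i / denominator
    return len(sorted_counts) / denominator


# Toy libraries of 100 variants: perfectly even vs. 10 variants hogging the reads.
uniform = Counter({f"v{i}": 10 for i in range(100)})
skewed = Counter({f"v{i}": 90 if i < 10 else 1 for i in range(100)})
print(variant_share_for_read_share(uniform))  # 0.9
print(variant_share_for_read_share(skewed))   # 0.1
```

A value near 0.9 indicates an even library; a value near 0.1 corresponds to the "Critical Failure" scenario.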

A Practical Workflow for NGS Validation

Sample Preparation: Take a representative sample of your plasmid DNA library before transformation. This ensures you are measuring the quality of the library itself, not any growth biases from biological amplification.

Amplicon Generation: Use a high-fidelity DNA polymerase to amplify the variable region of your library. Crucially, use the minimum number of PCR cycles required (typically 10-15 cycles) to add sequencing adapters. Over-amplification is a primary source of bias.

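Sequencing: Use an Illumina platform (MiSeq or NextSeq) with paired-end reads long enough to fully cover your diversified region. Aim for a read depth of at least 100x your theoretical diversity to ensure you capture low-frequency variants. For libraries with millions of designed variants, this translates into hundreds of millions to billions of reads, so check the target against your instrument's output before committing to a run.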
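A quick way to plan depth is to convert the coverage target into raw read counts. This Python sketch does that and adds a Poisson estimate of how many variants a given read count would detect; the Poisson model assumes a perfectly uniform library, so real, skewed libraries will detect fewer variants than it predicts, and reads lost to QC filtering are not accounted for.

```python
import math


def reads_needed(theoretical_diversity, fold_coverage=100):
    """Total valid reads for a target mean coverage per designed variant."""
    return theoretical_diversity * fold_coverage


def expected_fraction_detected(total_reads, theoretical_diversity):
    """Poisson estimate of the fraction of variants seen at least once.

    Assumes a perfectly uniform library; skewed libraries will fall short.
    """
    mean_coverage = total_reads / theoretical_diversity
    return 1.0 - math.exp(-mean_coverage)


# 1M-variant example, NNK x5 at the amino-acid level, and NNK x5 at the DNA level
for diversity in (1_000_000, 3_200_000, 33_554_432):
    print(f"{diversity:>11,} variants -> {reads_needed(diversity):>14,} reads at 100x")

print(f"{expected_fraction_detected(1_000_000, 1_000_000):.1%}")  # 63.2% at 1x coverage
```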

Bioinformatic Analysis: Process the raw sequencing data to calculate the key metrics. This involves:

Merging paired-end reads.

Filtering out low-quality reads.

Identifying and trimming constant flanking regions.

Translating DNA sequences to amino acid sequences.

Counting unique variants and their frequencies to assess valid read percentage, observed diversity, and library uniformity.
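The steps above can be sketched end to end in Python. This is a simplified illustration, not a production pipeline: it assumes paired-end reads have already been merged by a dedicated read-merging tool, uses hypothetical constant flank sequences (`LEFT_FLANK`, `RIGHT_FLANK`), and applies an assumed per-base quality cutoff of Q20 inside the variable region, with qualities in the common Phred+33 encoding.

```python
from collections import Counter

# Hypothetical constant flanks around the variable region; substitute your construct's.
LEFT_FLANK = "GCTAGC"
RIGHT_FLANK = "GGATCC"
MIN_PHRED = 20  # assumed per-base quality cutoff inside the variable region

BASES = "TCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    b1 + b2 + b3: AMINO_ACIDS[16 * i + 4 * j + k]
    for i, b1 in enumerate(BASES)
    for j, b2 in enumerate(BASES)
    for k, b3 in enumerate(BASES)
}


def extract_region(seq, qual):
    """Trim constant flanks; return the variable region, or None if missing or low quality."""
    start = seq.find(LEFT_FLANK)
    if start == -1:
        return None
    start += len(LEFT_FLANK)
    end = seq.find(RIGHT_FLANK, start)
    if end == -1:
        return None
    # Phred+33 encoding: quality = ord(char) - 33
    if any(ord(q) - 33 < MIN_PHRED for q in qual[start:end]):
        return None
    return seq[start:end]


def analyze(merged_reads):
    """merged_reads: iterable of (sequence, quality) pairs from already-merged reads."""
    total = passed = 0
    variants = Counter()
    for seq, qual in merged_reads:
        total += 1
        region = extract_region(seq, qual)
        if region is None:
            continue
        passed += 1
        if len(region) % 3:  # frameshift: not in frame
            continue
        protein = "".join(CODON_TABLE.get(region[i:i + 3], "X") for i in range(0, len(region), 3))
        if "*" in protein or "X" in protein:  # stop codon or ambiguous base
            continue
        variants[protein] += 1
    n_valid = sum(variants.values())
    return {
        "total_reads": total,
        "passed_qc": passed,
        "valid_pct": 100.0 * n_valid / passed if passed else 0.0,
        "unique_variants": len(variants),
        "counts": variants,
    }


def read(core, qual=None):
    """Build a toy merged read with high-quality ('I' = Q40) bases by default."""
    seq = LEFT_FLANK + core + RIGHT_FLANK
    return seq, qual or "I" * len(seq)


reads = [
    read("ATGGCT"),
    read("ATGGCT"),
    read("TGGTAA"),                            # stop codon
    read("ATGGC"),                             # frameshift
    ("AAAAAAATGGCTGGATCC", "I" * 18),          # left flank missing
    read("ATGGCT", "I" * 7 + "#" + "I" * 10),  # low-quality base in the region
]
result = analyze(reads)
print(result["total_reads"], result["passed_qc"], result["valid_pct"], result["counts"])
```

On this toy input it reports 6 reads, 4 passing QC, 50.0% valid, and a single unique variant (MA) seen twice. The `counts` Counter is the input you would feed into diversity and uniformity calculations.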

Advanced Workflow: Handling Diversified Regions Longer Than a Single Read

The workflow above is ideal for libraries where the diversified region is less than ~500 bp, which is easily spanned by a 2x300 bp Illumina run. What if your region is longer, such as a full-length scFv (~750 bp)? In this case, a single short-read amplicon cannot cover the full variant. Here are two common approaches.

1. Tiling Amplicons with Short-Read Sequencing

This method uses Illumina sequencing but breaks the problem into smaller pieces.

Method: Design multiple primer sets to generate overlapping amplicons that "tile" across your entire diversified region. For an scFv, you might have one amplicon for the VH domain and another for the VL domain. You then sequence these pools separately.
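For a generic (not domain-based) tiling, the amplicon coordinates follow from just three numbers. This Python sketch is a simplified helper under stated assumptions: the 450 bp maximum amplicon and 50 bp overlap are hypothetical values, and real designs must also respect primer placement, melting temperature, and sequence constraints that this code ignores.

```python
def tile_region(region_length, max_amplicon, overlap):
    """Return (start, end) coordinates of overlapping amplicons covering a region.

    Simplified: ignores primer placement, GC content, and other design constraints.
    """
    if not 0 <= overlap < max_amplicon:
        raise ValueError("overlap must be non-negative and smaller than max_amplicon")
    step = max_amplicon - overlap
    tiles, start = [], 0
    while True:
        end = min(start + max_amplicon, region_length)
        tiles.append((start, end))
        if end == region_length:
            return tiles
        start += step


print(tile_region(750, 450, 50))  # [(0, 450), (400, 750)]
print(tile_region(750, 300, 30))  # [(0, 300), (270, 570), (540, 750)]
```

The VH/VL split described above is the domain-aware version of the same idea: each tile is chosen to match a biological unit rather than a fixed length.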

Pros: Cost-effective, high-throughput, and uses well-established bioinformatic pipelines. It provides excellent quantitative data on the diversity, mutation rate, and uniformity within each sub-region.

Cons: You lose linkage information. You can confirm the diversity of your VH and VL domains independently, but you cannot determine which specific VH is paired with which specific VL in the original, full-length molecule. This is a significant drawback if combinatorial pairing is part of your library design.
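A toy example makes the linkage problem concrete. In this Python sketch the domain names and the two libraries are hypothetical: both contain the same VH and VL domains at the same frequencies but paired differently. Separate VH and VL amplicons report identical results for both, while full-length reads tell them apart.

```python
from collections import Counter

# Two hypothetical full-length libraries built from the same VH and VL domains.
library_a = [("VH1", "VL1"), ("VH2", "VL2")] * 50  # only "matched" pairs
library_b = [("VH1", "VL2"), ("VH2", "VL1")] * 50  # only "swapped" pairs


def tiled_view(library):
    """What separate VH and VL amplicons report: independent per-domain counts."""
    return Counter(vh for vh, _ in library), Counter(vl for _, vl in library)


def full_length_view(library):
    """What a full-length read reports: the linked VH-VL pair."""
    return Counter(library)


print(tiled_view(library_a) == tiled_view(library_b))              # True
print(full_length_view(library_a) == full_length_view(library_b))  # False
```

It also shows why multiplying the observed VH and VL diversities (here 2 × 2 = 4) only gives an upper bound on full-length diversity: each library actually contains just 2 distinct pairs.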

2. Full-Length Analysis with Long-Read Sequencing

This method provides a complete picture by sequencing the entire molecule in one go.

Method: Use a long-read sequencing technology like PacBio HiFi or Oxford Nanopore. These platforms can generate reads that are thousands of base pairs long, easily covering a full-length scFv or other large constructs.

Pros: Preserves complete linkage information. You get the exact, full-length sequence for every variant molecule. This allows for a true assessment of full-length diversity and uniformity, which is the gold standard for library QC. The accuracy of platforms like PacBio HiFi is now on par with short-read methods.

Cons: Long-read sequencing has historically had a higher cost per read and lower throughput than Illumina. In practice, this makes it best suited to lower-diversity libraries, where the available number of reads can still provide adequate coverage of each variant.

Conclusion: An Essential Investment

NGS-based library validation is an essential quality control step for any serious screening campaign. It provides a quantitative baseline of your starting population, allowing you to diagnose problems early and interpret downstream enrichment data with confidence. Investing in a deep sequencing analysis before you begin screening can save you months of troubleshooting and is the first—and most important—step towards a successful discovery.